What would happen at Harvard or Yale if a prof gave a surprise test in March on work covered in October? Everyone knows what would happen; that's why they don't do it.

John Holt, How Children Fail. Entry written October 30, 1958.

Post-COVID Learning Losses

Children face potentially permanent setbacks

Headline in Harvard Magazine. Published July 17th, 2023.

A meta-analysis of data conducted in the mid-nineties by Harris Cooper at the University of Missouri, which is still taken as the standard reference, concluded that students lost at least a month of learning every summer, with the fall-off of math knowledge and spelling skills being particularly pronounced. The study also found that results were worse for children in lower socio-economic groups than for middle- or upper-middle-class children. In learning, as in other spheres, the poor lost the most.

What to make of such results? Modifying the school calendar to shorten the summer break—and to implement longer year-round breaks—would be a common-sense response.

Rebecca Mead, The Cost of New York’s Summer Slide, May 29, 2015.

Our educational system here in the U.S. has this weird quirk where students are allowed to spend several months not studying, followed immediately by several more months of not studying, followed by fifty more years of not studying. I speak, of course, of the period immediately following graduation.

If school disruptions, summer breaks, or six months of studying something different can cause students to forget a good chunk of what they’ve learned, what happens after their formal education ends? How much remains after three months? After three years?

It would not surprise John Holt to learn that this question is under-studied relative to its importance. We instinctively expect the answer to be horrifying and unfixable. But many brave souls have made an effort. An excellent 2008 review paper by Eugene Custers looked at more than thirty studies, published between 1923 and 2003, and found reason to believe we may be overly pessimistic. Custers comments:

On second thoughts, this may not be really surprising, because if the popular belief were true, then formal education, including basic science education in medicine, would be ‘‘a colossal waste of time’’ (Ellis et al. 1998).

(I love that there’s a citation there.)

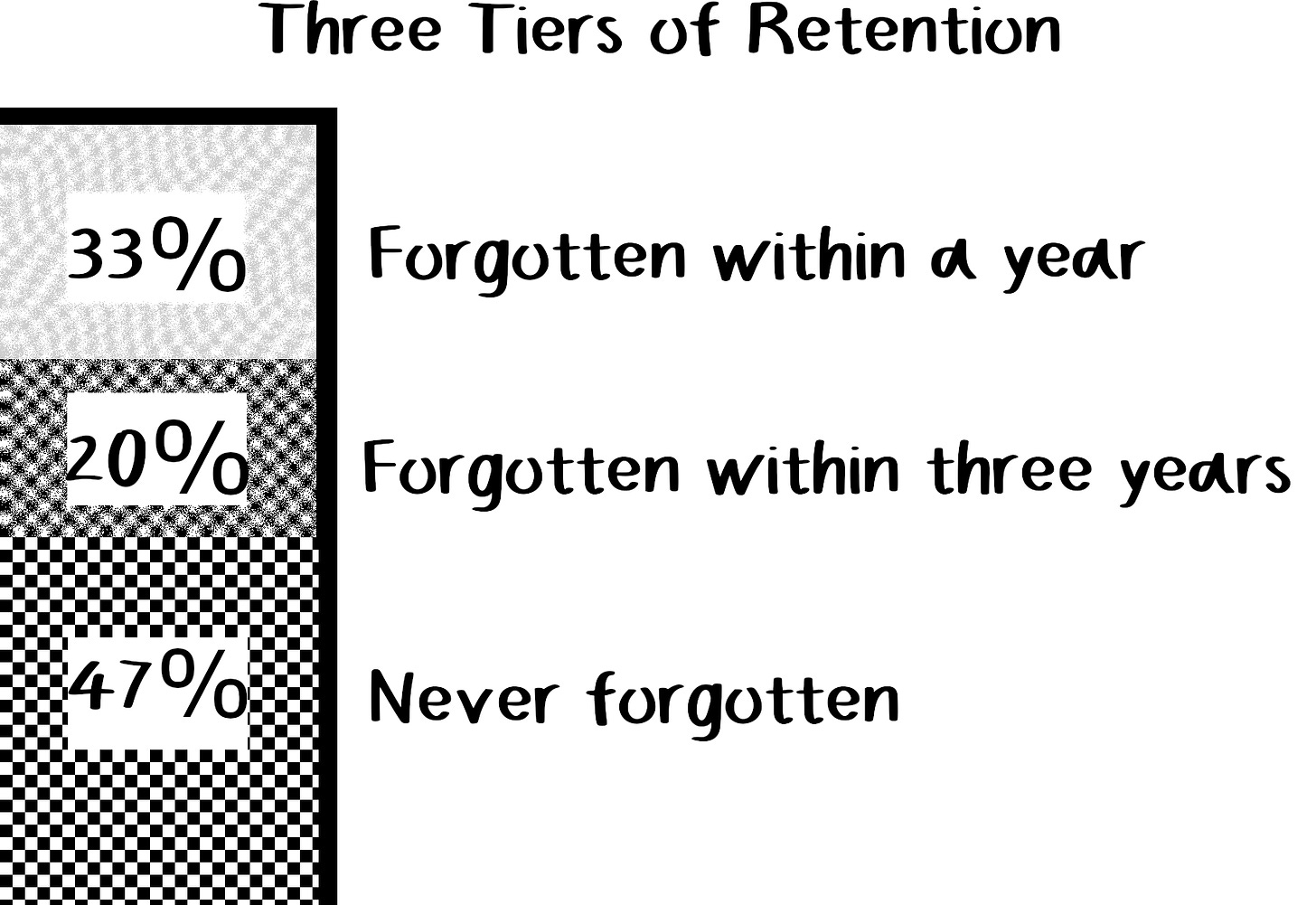

The studies were all conducted under wildly different conditions, and unsurprisingly show wildly different results, but they do seem to gesture in the direction of a common model. Overall, here’s my rough attempt to summarize Custers’s summary:

(There’s also a secret fourth tier, for knowledge that you immediately start putting to practical use, for which there’s close to 100% retention.)

It’s heartening that if you still remember something three years after the last time it was relevant to your life, you’ll probably remember it as long as anything else. Custers says it looks like your confidence in what you remember decreases with time, but that you’re mostly underestimating yourself.

What Goes In What Tier?

This remains controversial, but the prevailing view (both academic and common-sense) seems to be something like this:

The worst tier, averaging about one-third of school, is stuff we learn through one-off cram sessions for a test the next day. It gets filed the same way we file perishable facts, like “the weather forecast says it’s going to rain tomorrow.”

The middle tier is stuff we learn through spaced repetition—study it for a weekly test, study it again for a midterm, study it again for a final, say. That stuff gets filed the same way we file facts that are often useful in our day-to-day life, like train schedules that don’t change often. It’s got a long half-life—we’ve all got those orphaned facts seemingly randomly preserved long past their expiration date.

The best tier, a little under half of school, is stuff we learn in depth, as part of a larger framework: the sort of thing you can’t easily put on flashcards, but also you don’t need to, because the interconnected nature of what you’re studying naturally leads to spaced repetition. This stuff is even more robust, because everything reinforces everything else. To paraphrase Yudkowsky, knowledge becomes truly part of you if, even if you forgot it, you could figure it out from what you do remember.

Let’s assume for the sake of this article that we care about this, that we want to improve retention for knowledge that isn’t immediately relevant or useful. Having students “learn” something they’ll forget within months is, indeed, ‘‘a colossal waste of time’’ (Ellis et al. 1998). Problem is, this is harder to test within a useful timeframe. If you give a test on something your students just learned, you can tell whether they learned it or not, but not which tier it ended up in. If you wait eight years and then do a followup test, you’ll know, but it’ll be mostly too late to be applicable. You’ll have different students, a different curriculum, a different environment. And it won’t be any use at all to your ex-students.

Happily, though, these results suggest that the tiers are somewhat discrete, meaning that how much you remember after 3 months is predictive of how much you’ll remember after a year, and how much you remember after 3 years is predictive of how much you’ll remember after a decade. That’s a timeframe that allows for feedback! Any math a student forgets over summer break is likely to be math they’d have forgotten anyway, as soon as they stopped using it, so a test given soon after summer break tells us a lot more than one given right before. And a test given to seniors on their freshman curriculum tells us even more.

Knowledge is power (otherwise school would be a colossal etc.). If a student takes tests in September, unprepared, and does poorly in math, okay in science, and great in history, that tells us that our pedagogical approach isn’t working well for this student in math, and that they might have an aptitude for history, both of which are actionable facts. If they’d taken those tests three months ago, the signals would be fainter and noisier—the student might be better at cramming for math tests than history tests, causing misleading grades.

In this way, long breaks and pop quizzes are educational assets, provided teachers are able to adapt to the information they provide.

On the other hand, this same model predicts that a long break might interfere with “true” learning. If depth and context are crucial to move from the middle tier to the best, studying something for two months straight is almost certainly more effective than if there’s a big gap in the middle. Surprise disruptions, like a pandemic lockdown, might be even worse. Teachers can plan a cohesive curriculum with an end date, but it’s harder to plan a curriculum that can survive being broken in two at any point.

Depth-First

In theory, we could get the best of both worlds by rearranging the order in which we learn subjects, without needing to futz with the breaks. Suppose we spent a year just learning math, then another just learning science, then another just learning history? We’d get more cohesion, and therefore better retention, and we’d have an opportunity to test true retention in time to adapt.

Or we would, except a good chunk of us would be miserable and burned out like a month into each year. Voluntary learning can be depth-first, because we’re learning something we’re into, but when learning is both mandatory and standardized, we’re inevitably going to be learning things we’re not into, a lot of the time, and that’s easier to swallow in smaller doses.

There’s no particular reason to expect this problem to have a great solution. How can we learn every subject in depth? We probably just can’t. If you’re not into math, and you learn it the standard way, you’ll forget it after you graduate. If you’re not into math, and you’re forced to do nothing but study math for a year, you will go insane. Schools can, and do, improve this on the margins, by compromising between the two approaches, futzing with breaks, and being more intentional about teaching interdisciplinary connections. But we still end up flushing half of what we eat, undigested, because it’s the wrong stuff for us at the wrong time.

At a certain point, even with a perfect pedagogy, we need to make a choice. That choice is not between depth and breadth. It’s between depth and the illusion of breadth. It’s between true learning and performative learning.

We typically choose…poorly.

Why is fake learning preferred over real?

I’m honestly still a little confused about how we got here. Why does New York State mandate that “all students should know and be able to do” tasks like “identify zeros of polynomials, including complex zeros of quadratic polynomials?” Like, sure, it’d be nice if we all knew that, and some people need to. But New York State legislators must know, from personal experience, that some people are never going to retain that information, and that those people can still go on to live happy and productive lives as New York State legislators. By the time we get to stuff like this, why is it not “all students should have the opportunity to learn?”

And why do we pay any attention to final exam scores?

I think some of it is that we’re too obsessed with fairness, both in opportunity and evaluation. Here’s an insightful bit from the New Yorker article I quoted at the top:

Cooper’s research did show, however, that shortening the summer break has some impact upon lower-income students—the same children whom he earlier found were most likely to be afflicted by summer learning loss.

…

Among children who had access to high-quality books that they were allowed to keep, and who had some choice in what they read rather than being obliged to stick to an assigned list, there were considerable and sometimes dramatic improvements in literacy. Rather than a summer slide, there was a summer ascent.

When we let kids read whatever they want, the gap between rich and poor widens. But public school, in its majestic equality, prevents rich and poor alike from reading what they’re interested in. If you see education as zero-sum, as a way of helping prepare kids to compete against each other as adults, then uniform fake learning is preferable to unequal real learning.

That’s a stereotypically leftist attitude. Conservatives tend to prefer a different kind of fairness—they see school itself as a competition. If study is self-directed, it’s like having one athlete riding a bike and the other on foot. How are we supposed to know who’s better? Who deserves to go to a better college, get a better degree, and a better job? And if we don’t spend years 6-18 in one long race, how are we ever going to learn grit and self-sufficiency?

I’m being snarky, but, sure, economic mobility is good. Both of these attitudes have their place. I’m just super skeptical that the best way to achieve these goals is to spend our entire childhoods on them. Human childhood exists for a reason, and these modern concepts ain’t it.

What if we had school every four years?

After three years, you’ve forgotten everything you were going to forget. Should we optimize for that? I’m not sure if I’m seriously proposing this, but I want to think it through. Suppose we kept our current educational model, but only a quarter of the time, and forever?

At 6, you’d go to kindergarten for a full year. Then you’d spend three years with the right to, essentially, a well-funded day-care with “enrichment opportunities.” No standards for outcomes, just a mandate that every parent gets free day care if they want it, and that kids in that day-care have access to educational toys, and ways of learning about things they think are cool. Then, at 10, you take some assessments, get placed in appropriate classes, and do a year of something in the general genre of standard first through fifth grade. And repeat. Ages 11-13, it’s more like a free optional day camp, ideally with some mini-apprenticeship opportunities—ways to explore possible jobs and earn money. Ages 15-17, it’s still optional day camp, but with more of a focus on internships. At 18, you do your fourth and final mandatory standardized year, with standardized tests at the end for institutions and employers who care about that.

After that, every fourth year (ages 22, 26, 30, etc.) you get a little support (a stipend, or subsidized community college, something like that) for any courses you’d like to take, and a cultural expectation that people won’t be totally focused on their careers at those ages.

We should probably do some rough check on the financials just to get a sense of feasibility. New York State spends about $30,000 per student per year, while a nice-looking summer day camp in Riverbank State Park costs $550 for five weeks, which implies a base cost of no more than $6000 to do that sort of thing year round. So replacing nine years of school with camp would free up $216,000 per child, or $24,000 per year, for optional enrichment activities. If we save some of that for those continuing education subsidies, with time discounting it looks to me like this works.[1]
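For anyone who wants to poke at those numbers, here is a minimal sketch of the arithmetic. The dollar figures are the ones quoted above; the constant and function names are made up for illustration, and scaling the five-week camp price linearly to 52 weeks is an assumption (real year-round programs may well price differently).

```python
# Back-of-the-envelope check using only the figures quoted in the text.
SCHOOL_COST_PER_YEAR = 30_000   # New York State spending per student per year
CAMP_COST_FIVE_WEEKS = 550      # Riverbank State Park summer day camp
WEEKS_PER_YEAR = 52
CAMP_BUDGET_PER_YEAR = 6_000    # the text's rounded-up ceiling for year-round camp
CAMP_YEARS = 9                  # years of school replaced by camp


def camp_cost_year_round(cost_five_weeks: float = CAMP_COST_FIVE_WEEKS) -> float:
    """Scale the five-week price to a full year (assumes linear scaling)."""
    return cost_five_weeks / 5 * WEEKS_PER_YEAR


def freed_per_year() -> int:
    """Money left over each camp year after paying the rounded-up camp budget."""
    return SCHOOL_COST_PER_YEAR - CAMP_BUDGET_PER_YEAR


def freed_total() -> int:
    """Total freed over all nine camp years, ignoring time discounting."""
    return freed_per_year() * CAMP_YEARS


if __name__ == "__main__":
    print(f"Year-round camp estimate: ${camp_cost_year_round():,.0f}")
    print(f"Freed per camp year:      ${freed_per_year():,}")
    print(f"Freed over nine years:    ${freed_total():,}")
```

The year-round estimate comes out a bit under the $6000 ceiling, so the $24,000 and $216,000 figures are on the conservative side.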

But how much would we actually learn? Well, we did accidentally do an experiment recently where we massively disrupted schooling for three years. And the results are starting to come in. According to Harvard’s Center for Education Policy Research, in the year after COVID, students were “half a grade level in math achievement and a third of a grade level in reading achievement” behind where someone of their age would normally be. Since then, they’ve been gradually catching up. So three years of having school become essentially optional, with online classes on the honor system, and rampant “chronic absenteeism” even after schools physically reopened…and students learned, on average, about 2.5 years worth of math, according to our tests. (And learned faster afterwards, according to the same tests, but let’s ignore that for now.)

If we’d done that two more times, with somehow the same level of chaos, ill-preparedness, and background stress each time, our students would be graduating knowing an average of 1.5 fewer grade levels of math. Algebra I instead of Algebra II. According to New York State standards, that means the average 18-year-old will know how to find some of the zeroes of a polynomial, but not the ones with imaginary components. The future electrical engineers and quantum physicists, the people who will actually use complex roots, will probably still know how by then, but the average student won’t.
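The reasoning in the last two paragraphs is simple enough to write down. This is only a sketch: it takes the half-grade-level math figure quoted above at face value and assumes, as the text does, that the shortfall from each three-year gap simply adds up. The names are hypothetical.

```python
# Rough model of the "three COVID-like gaps" thought experiment.
GAP_YEARS = 3                # length of each gap between mandatory school years
MATH_SHORTFALL_PER_GAP = 0.5 # grade levels behind after one disrupted stretch
NUM_GAPS = 3                 # ages 7-9, 11-13, and 15-17


def math_learned_per_gap() -> float:
    """Grade levels of math picked up during one three-year gap."""
    return GAP_YEARS - MATH_SHORTFALL_PER_GAP


def total_shortfall_at_graduation() -> float:
    """Cumulative shortfall, assuming each gap's loss adds linearly."""
    return NUM_GAPS * MATH_SHORTFALL_PER_GAP


if __name__ == "__main__":
    print(f"Math learned per gap: {math_learned_per_gap()} grade levels")
    print(f"Shortfall at 18:      {total_shortfall_at_graduation()} grade levels")
```

Changing `MATH_SHORTFALL_PER_GAP` is the quickest way to see how sensitive the "Algebra I instead of Algebra II" conclusion is to that one measurement.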

That’s the downside. The upside is that students will have spent most of their time doing the kind of learning that’s optimized for long-term retention, rather than short-term test results. The self-directed, curiosity-driven learning that defines childhood. And those four years of traditional schooling will be better suited to each student’s needs and aptitudes, because placement will be based on measured long-term retention. So instead of forgetting more than half of what they learn, students will retain almost all of it.

If, by dropping the last year and a half of K-12 education, you could double the value of every year that came before, without spending any extra tax dollars, that’d be a great deal! That’s an equivalent effect to this proposal, under fairly pessimistic assumptions. Everyone would grow up knowing more, much more, if they had only four years of standardized education as kids instead of thirteen.

Also, summer vacation would be three years long, with free day camp for as long as you want, which sounds awesome. And there’d be money left over for an activity you actually want to do, like free space camp or free theater classes. And then we get to do more school, studying whatever we want on our own terms, every four years for the rest of our lives.

All of the typical defenses of school apply to this model. Whatever values and grit we’re supposed to learn by being forced into competitive standardized education, we’d still get plenty of opportunity to learn them in our four years of that. Kids still get one year out of four “in the system,” for whatever safety and wellness benefits that brings. We’d still learn all the same basics. We’d still take the SAT at the end. We’d be better prepared for the job market, thanks to vocational training and work experience, and/or better prepared for universities thanks to experience in self-directed study.

I’m not saying this is the ideal system, but compared to our current one, it seems shockingly good.

How do we get there?

First of all—if you’re an upper-middle-class parent, you can kind of just do this for your kid today. It’ll cost you, compared to public school, which you’ll still be paying for no matter where your kid is, but if you can afford it, the main trick is going to be socialization. If almost everyone else your kid’s age is only available on weekends, in the summer, and after school, is that enough? Depends on the kid, I think. If you have the freedom to move around, spending three years in a place with low cost of living, then one year in a place with great public schools, can help.

But to make this option available to everybody, we’ll need it to be publicly funded, which means we need to change how we talk about learning. If we’re going around talking about “the problem of the summer slide” all the time, we’re never going to fix this. Here’s what we need to do:

Start talking about gaps (summers, COVID) as revealing poor quality education, rather than causing it. They are doing far less harm than the headlines suggest: they’re decreasing test scores mostly by making them more accurate.

Don’t put a lot of weight, as an educator or parent, on final exams. At best, they’re a way of tricking students into studying more, so maybe we shouldn’t eliminate them entirely. But the results don’t matter. The only measure of success that matters comes from retesting later, without prep.

Conversely, praise teachers and schools whose students retain knowledge after a long gap.

Hire based on ability, not education. And make sure it is, and stays, legal to do so.

Normalize different people knowing different things. We need that. That is how our entire civilization works. People need to know stuff, and it needs to be not all the same thing. If every student in the U.S. graduated high school knowing Algebra II, or Latin, or in what order all fifty states entered the Union, that would be a waste. That would be a sign that we have done something wrong. (It’d also be bad if none of them graduated knowing those things.)

If a student is burning out, they’re not really learning anything, no matter how good their grades are. So give them a break.

Okay, one more thing.

Finding the zeroes of a polynomial means solving an equation like one of these:

Because the product of two numbers is zero when, and only when, at least one of them is zero, the easiest way to solve these is often to express the left-hand side as a product of simpler expressions. For example,

$$x^2 - 4 = (x - 2)(x + 2) = 0$$

So the zeroes of that polynomial are -2 and 2. Which makes sense—if you want x squared to equal 4, so that subtracting 4 leaves zero, you need the square root of 4. And the negative square root works too, because a negative times a negative is a positive.

But what if you needed x squared to be negative, like for this innocent-looking problem?

$$x^2 + 1 = 0$$

You need a square root of -1. But any number squared is zero or positive. So we need to invent new numbers that work differently. This is math, so we can just…do that. We define i to mean the square root of negative one, and so now it’s a zero of the polynomial. (So is -i, for the same reason -2 worked above.) All multiples of i are referred to, playfully, as “imaginary” numbers, while the numbers we’re more used to are the “real” numbers. A number that can be either kind, or the sum of a real and an imaginary number, is called a complex number. So that’s what a complex zero of a polynomial is. One nice thing is that once you’ve added in i, it doesn’t create any new kinds of unsolvable algebra problems the way adding in zero or negative numbers did. So the complex numbers are referred to as the “algebraic closure” of the real numbers. And that’s why they’re useful, occasionally: you can do algebra all day and not get stuck.

(Despite the name, an imaginary number is just as “real” as the number 2. Which is to say, not very real, but real enough to be useful when talking about real things.)
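If you’d rather watch a computer not get stuck, here is a small sketch using Python’s standard `cmath` module. Note that it uses the quadratic formula rather than the factoring approach described above, and the function names are mine, chosen for illustration.

```python
import cmath


def quadratic_zeros(a: float, b: float, c: float) -> tuple[complex, complex]:
    """Return both zeros of a*x**2 + b*x + c via the quadratic formula.

    cmath.sqrt accepts negative inputs and returns a complex result,
    so a negative discriminant is handled without special cases.
    """
    if a == 0:
        raise ValueError("not a quadratic: a must be nonzero")
    root = cmath.sqrt(b * b - 4 * a * c)
    return (-b + root) / (2 * a), (-b - root) / (2 * a)


def evaluate(a: float, b: float, c: float, x: complex) -> complex:
    """Plug x back into the polynomial to confirm it really is a zero."""
    return a * x * x + b * x + c


if __name__ == "__main__":
    for coeffs in [(1, 0, -4), (1, 0, 1)]:
        zeros = quadratic_zeros(*coeffs)
        print(coeffs, "->", zeros)
```

For the coefficients `(1, 0, -4)` the zeros are 2 and -2, and for `(1, 0, 1)` they are i and -i, which Python writes as `1j` and `-1j`.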

I pick on math because it’s my favorite subject. I sincerely wish more people knew more math. I majored in math in college, basically by accident, because at a liberal arts college, taking one math class every semester makes you a math major, and I was definitely not going to stop studying math. Taught properly, it’s way too much fun. But part of teaching it properly is to abandon the notion that it’s a Vital Citizenship Skill. It breaks my heart whenever somebody tells me they always hated math. That’s not math’s fault. You don’t hate a subject just because it’s not your thing. You hate something because your education forced it on you in a pointless and humiliating way. People are coming into school with zero math expertise and leaving with negative math expertise.

[1] Math! Let’s say that out of that $24K a year saved by not having regular classes, we give you $6K a year to go to programs like CTY or Space Camp. That leaves $162,000 to invest for your continuing education. Using the rule of thumb that a 4% withdrawal rate is sustainable, we can withdraw 16% of that every four years, meaning you’d get about $26,000 to help pay for classes and/or take time off from work. (This is simplifying a lot, but mostly in ways that underestimate the benefits.)
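The same footnote arithmetic as a sketch, with hypothetical names. It ignores investment growth during childhood and treats the 4% rule as four years of withdrawals taken at once, which is the simplification the footnote itself makes.

```python
# Footnote arithmetic: what is left to invest, and what each fourth-year stipend is.
FREED_PER_YEAR = 24_000          # saved per camp year, from the main text
ENRICHMENT_PER_YEAR = 6_000      # spent on programs like CTY or Space Camp
CAMP_YEARS = 9
ANNUAL_WITHDRAWAL_PERCENT = 4    # rule-of-thumb sustainable withdrawal rate
CYCLE_YEARS = 4                  # stipend is paid once every four years


def invested_total() -> int:
    """Amount set aside over nine camp years, with no growth assumed."""
    return (FREED_PER_YEAR - ENRICHMENT_PER_YEAR) * CAMP_YEARS


def stipend_per_cycle() -> float:
    """Four years' worth of 4% withdrawals, taken as one lump sum."""
    return invested_total() * ANNUAL_WITHDRAWAL_PERCENT * CYCLE_YEARS / 100


if __name__ == "__main__":
    print(f"Invested:          ${invested_total():,}")
    print(f"Stipend per cycle: ${stipend_per_cycle():,.0f}")
```

The stipend works out to $25,920 per four-year cycle, which rounds to the $26,000 quoted.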

Great piece! Love the concept. As a semi-relevant case study this is kind of how I treated ages 15-19. Skipped a fair bit of school, read a lot of what I wanted and none of what I didn't want, worked and played sports. Then I took a full year of natural sciences at 19 that made up for all my inability to show up or show interest in school the three years before that. I cannot say that my ability is less because of that :)